Scaling Battlesnake testing with Kubernetes

November 14, 2021

Note: this article assumes familiarity with Kubernetes terminology.

A friend and I are using Battlesnake as a platform for learning new tech skills. In my case, the goal is to keep improving my DevOps skillset, and mainly to finally take the plunge and learn Kubernetes.

Battlesnake is a multiplayer version of the classic game Snake, where the game engine sends HTTP requests to your web server. Your response simply needs to be up, down, left or right, delivered within a deadline of 500ms. How you achieve it, and with which technologies, is entirely up to you.
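To make that request/response contract concrete, here is a minimal sketch of a snake web server using only the Python standard library. It assumes the endpoint layout of the public Battlesnake API (a `POST /move` answered with a JSON body such as `{"move": "up"}`). The port (8111, the one used in the manifests later in this post) and the always-move-up strategy are placeholders for illustration, not our actual snake.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

VALID_MOVES = ("up", "down", "left", "right")


def choose_move(game_state: dict) -> str:
    """Placeholder strategy: always move up. A real snake would inspect game_state."""
    return "up"


class SnakeHandler(BaseHTTPRequestHandler):
    def _send_json(self, payload: dict) -> None:
        body = json.dumps(payload).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_GET(self) -> None:
        # Assumption: the engine reads snake metadata from the index route.
        self._send_json({"apiversion": "1"})

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        game_state = json.loads(self.rfile.read(length) or b"{}")
        if self.path == "/move":
            self._send_json({"move": choose_move(game_state)})
        else:
            # Other POST routes (game start/end) only need an acknowledgement.
            self._send_json({})


def serve(port: int = 8111) -> None:
    """Block forever, answering engine requests on the given port."""
    HTTPServer(("0.0.0.0", port), SnakeHandler).serve_forever()
```

Calling `serve()` starts the server. Everything interesting then happens inside `choose_move`, which has to return well within the 500ms deadline.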

As you iterate on your snake it becomes essential to test it frequently, both to catch regressions (such as the snake starting to prefer head-on collisions with stronger snakes) and to check whether your changes have actually made it a stronger contender.

Initially we achieved this by pushing the snake to our production EC2 instance hosted in AWS us-west-2 (as close as possible to the Battlesnake servers, since every millisecond of latency counts towards the 500ms deadline). However, this process was slow, and was made even slower by our move to Graviton instances.

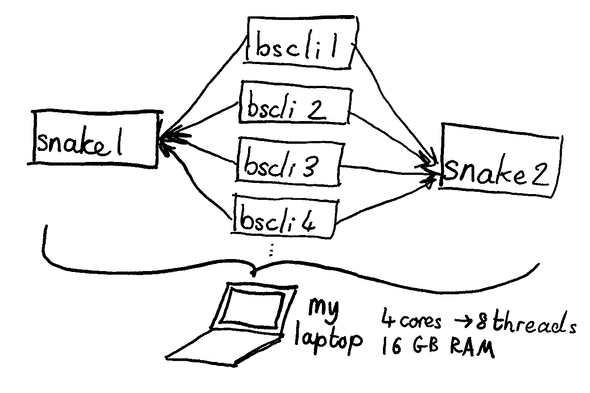

The Battlesnake team provides a command line version of their rules engine which can run games locally. This means we can test our snake locally, ensuring it gets the basics right (do not hit walls, do not hit yourself, do not run out of health, etc), and see how it performs in a game against another snake (or potentially even against itself).

This is still limiting when it comes to testing two snake variants. You can only easily run the CLI sequentially. If your snakes are also running on the same computer, then they will compete for the same resources (CPU, RAM) that the CLI is using.
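A sequential local testing round can be sketched as a small Python wrapper around the CLI. The flags are the same ones used in the Job manifest later in this post. The localhost URLs in the usage note and the assumption that the `battlesnake` binary sits in the current directory are illustrative only.

```python
import subprocess


def build_play_command(snakes, width=11, height=11, timeout_ms=500):
    """Build the argument list for one `battlesnake play` game.

    `snakes` maps a snake name to the URL of its web server.
    """
    cmd = ["./battlesnake", "play", "-W", str(width), "-H", str(height)]
    for name, url in snakes.items():
        cmd += ["--name", name, "--url", url]
    cmd += ["-v", "-t", str(timeout_ms)]
    return cmd


def run_round(snakes, games):
    """Run `games` games one after another and return each game's exit code."""
    cmd = build_play_command(snakes)
    return [subprocess.run(cmd).returncode for _ in range(games)]
```

For example, `run_round({"foobar1": "http://localhost:8111", "pastaz2": "http://localhost:8112"}, games=10)` would play ten games back to back. Each game only starts once the previous one has finished, which is exactly the limitation described above.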

Ideally we should be able to run multiple instances of the CLI in parallel, along with multiple, load-balanced instances of each snake variant. This is where Kubernetes comes in. Whilst this can be achieved without Kubernetes (there are options such as HashiCorp Nomad), k8s offers the features we need in a single package whilst also providing a great opportunity for learning.

A Kubernetes-based approach

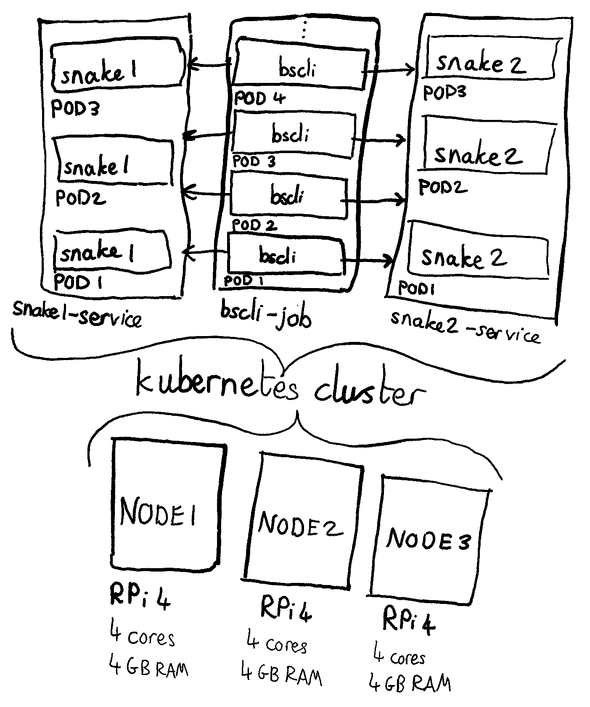

Kubernetes lets us orchestrate our software (packaged as containers) across one or more nodes. It has built-in concepts for service discovery, load balancing and running jobs in parallel.

Here is what our setup looks like. It runs k3s on a Raspberry Pi based cluster:

Each snake is containerised and deployed to a Docker registry hosted alongside the cluster.

For each snake, we define a Deployment. This maintains a ReplicaSet underneath, which creates a set of pods running the snake’s web server. We also define a Service which makes those pods reachable within the cluster. To reach it from outside the cluster, for instance to curl it manually, we define the Service as type NodePort and hardcode a port such as 30000.

Here’s a sample:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: foobar1
      namespace: snakepit
      labels:
        app: foobar1
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: foobar1
      template:
        metadata:
          labels:
            app: foobar1
        spec:
          containers:
            - name: foobar1
              image: "our-registry-url:5000/snake-foobar1:testing-arm"
              ports:
                - containerPort: 8111
              imagePullPolicy: Always
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: foobar1
      name: foobar1-service
      namespace: snakepit
    spec:
      selector:
        app: foobar1
      type: NodePort
      ports:
        - protocol: TCP
          port: 8111
          targetPort: 8111
          nodePort: 30000

The CLI is also containerised. We define a k8s Job whose command includes all the CLI arguments needed to point it at the snakes deployed within the cluster. We can refer to each snake by the name of its Service, since k8s resolves DNS for us within the cluster (for example: http://foobar1-service:8111). Here’s a sample:

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: bscli
      namespace: snakepit
    spec:
      completions: 4
      completionMode: Indexed
      parallelism: 2
      template:
        spec:
          containers:
            - name: bscli
              image: "our-registry-url:5000/bscli:testing-arm"
              imagePullPolicy: IfNotPresent
              command:
                [
                  "./battlesnake",
                  "play",
                  "-W",
                  "11",
                  "-H",
                  "11",
                  "--name",
                  "foobar1",
                  "--url",
                  "http://foobar1-service:8111",
                  "--name",
                  "pastaz2",
                  "--url",
                  "http://pastaz2-service:8111",
                  "-v",
                  "-t",
                  "500",
                ]
          restartPolicy: Never
      backoffLimit: 1

By adjusting completions and parallelism we can decide how large our testing round should be, along with how many games run at the same time. In an ideal world parallelism == completions. However, my cluster has limited resources, and running too many pods slows down the response time of the snake web servers, so a round of testing ends up taking longer to complete. By constraining parallelism, we ensure that we do not saturate the cluster’s resources.
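Since completions and parallelism are the two knobs we keep turning, one option is to generate the Job manifest from a script rather than editing it by hand. The sketch below is an illustration under stated assumptions, not the setup we actually run: `make_job` and `waves` are hypothetical helper names, and the manifest is emitted as JSON (which `kubectl apply -f` accepts alongside YAML) to avoid needing a YAML library.

```python
import json
import math


def waves(completions, parallelism):
    """Number of back-to-back batches needed if every game took equally long."""
    return math.ceil(completions / parallelism)


def make_job(name, image, command, completions, parallelism, namespace="snakepit"):
    """Return a Job manifest (as a dict) mirroring the sample above."""
    if completions < 1 or parallelism < 1:
        raise ValueError("completions and parallelism must be at least 1")
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "completions": completions,      # size of the testing round
            "completionMode": "Indexed",
            "parallelism": parallelism,      # games running at the same time
            "backoffLimit": 1,
            "template": {
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "imagePullPolicy": "IfNotPresent",
                            "command": command,
                        }
                    ],
                    "restartPolicy": "Never",
                }
            },
        },
    }


def render(job):
    """Serialise the manifest so it can be piped to `kubectl apply -f -`."""
    return json.dumps(job, indent=2)
```

With completions set to 4 and parallelism set to 2, `waves(4, 2)` gives 2: the round runs as roughly two batches of two games. Raising parallelism reduces the number of batches, but only helps while the cluster still has headroom to keep the snakes responding inside the deadline.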

Future work

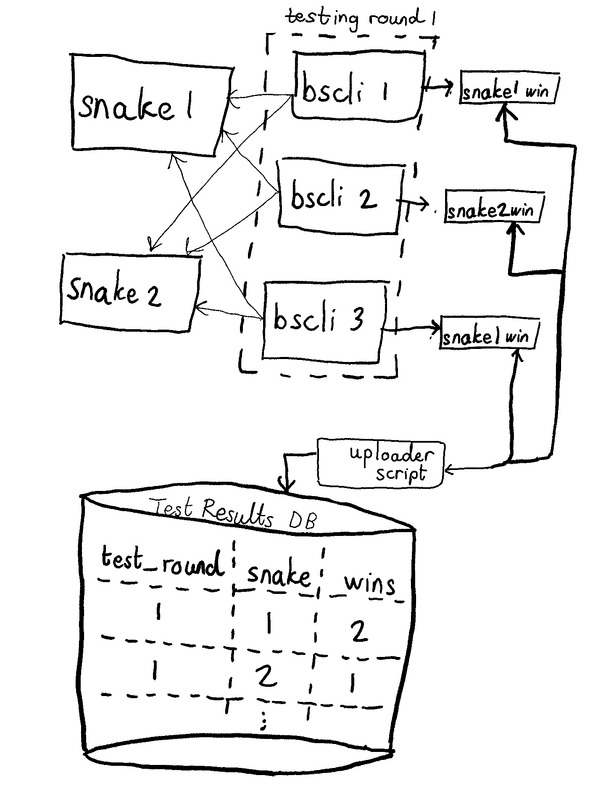

Our work is not finished yet. Right now, this system does not track the number of wins per snake in a testing round, which is the entire point of testing variants against each other.

To achieve this, there are a number of options:

- Tail and grep the logs of the CLI pods (the last line printed by the CLI states the winner).

This option is quick and dirty, but it has some constraints, mainly that we would be doing it after the testing round is complete. If the logs from multiple pods in different testing rounds ended up interleaved, we would not be able to tell which result belongs to which testing round.

- Patch the CLI to write the results to a file and upload it to a webserver by including a script of some sort which will run after the CLI exits.

For this, I forked and patched the CLI to export the details of the game to a line-delimited JSON file. I also opened a PR which has started the RFC process around this functionality.

The main issue here is that I would have to maintain a fork of the CLI and would need to run the script after the game ends, potentially complicating how the container is built and how the current system of overriding the container’s command works.

- Divert the CLI Pod’s logs to a sidecar container by streaming them, followed by uploading the result.

This would be the most “k8s native” way of reaching the goal. There is no need to patch the CLI (for our current needs at least, which is simply to answer the question “did the snake win?”), as that is easily parseable from the current CLI’s output. The script which uploads the result also lives in a separate container within the Pod, keeping the CLI’s container clean and simple.
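Whichever option wins, the core of the first and third is the same: read the last line of each game's log and count wins per snake. Here is a rough sketch of that step. The exact wording of the CLI's final line is an assumption here (something ending in `<name> is the winner.`), so the pattern would need adjusting to whatever your CLI version actually prints. `winner_from_log` and `tally_round` are hypothetical helper names.

```python
import re
from collections import Counter

# ASSUMPTION: the final log line contains "<name> is the winner".
WINNER_PATTERN = re.compile(r"(\S+) is the winner")


def winner_from_log(log_text):
    """Return the winner named on the last non-empty log line, or None."""
    lines = [line for line in log_text.splitlines() if line.strip()]
    if not lines:
        return None
    match = WINNER_PATTERN.search(lines[-1])
    return match.group(1) if match else None


def tally_round(logs):
    """Count wins per snake across the logs of one testing round.

    `logs` is an iterable with one log string per game. Games without a
    recognisable winner line (for example a draw) are skipped.
    """
    winners = (winner_from_log(log) for log in logs)
    return Counter(w for w in winners if w is not None)
```

Keeping one `logs` collection per Job is what stops rounds from being mixed up: as long as each testing round is tallied separately, the interleaving problem described in the first option does not arise.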

Once a solution is picked and implemented I’ll be back with another blog post. In the meantime, feel free to check out our progress on the bantersnake team page!

Sidenote: I drew the diagrams myself on a reMarkable 2. It’s a great piece of hardware, but my lack of decent handwriting is pretty clear. I am hoping this will improve over time.